W. Krichene, M. C. Bourguiba, K. Lam, and A. Bayen. On Learning How Players Learn: Estimation of Learning Dynamics in the Routing Game.

ACM Transactions on Cyber-Physical Systems - Special Issue.

@article{krichene2018tcps,

author = {Krichene, Walid and Bourguiba, Mohamed Chedhli and Lam, Kiet and Bayen, Alexandre},

title = {On Learning How Players Learn: Estimation of Learning Dynamics in the Routing Game},

journal = {ACM Trans. Cyber-Phys. Syst.},

issue_date = {February 2018},

volume = {2},

number = {1},

month = jan,

year = {2018},

issn = {2378-962X},

pages = {6:1--6:23},

articleno = {6},

numpages = {23},

doi = {10.1145/3078620},

acmid = {3078620},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {Routing game, behavioral experiment, sequential decision model},

}

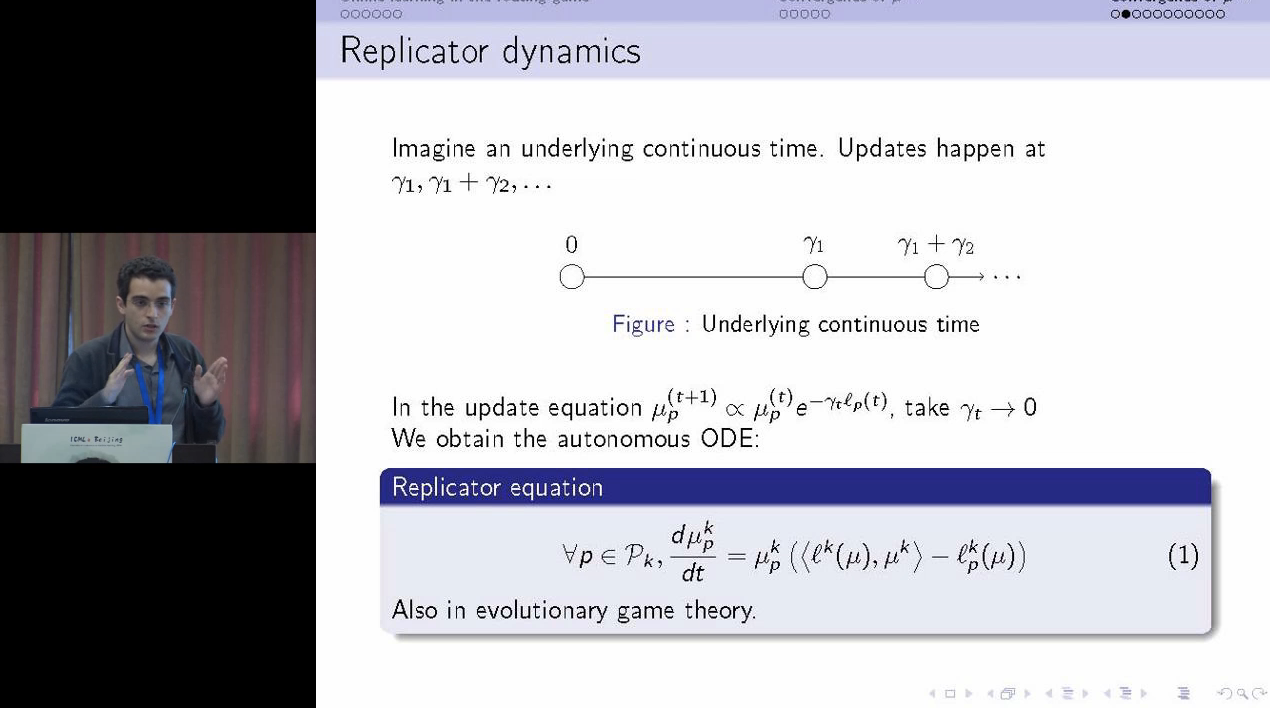

The routing game models congestion in transportation networks, communication networks, and other cyber-physical systems in which agents compete for shared resources. We consider an online learning model of player dynamics: at each iteration, every player chooses a route (or a probability distribution over routes, which corresponds to a flow allocation over the physical network), then the joint decision of all players determines the costs of each path, which are then revealed to the players.

We pose the following estimation problem: given a sequence of player decisions and the corresponding costs, we would like to estimate the parameters of the learning model. We consider, in particular, entropic mirror descent dynamics and reduce the problem to estimating the learning rates of each player.
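As an illustration of the estimation problem described above, the following is a minimal sketch, not the authors' implementation. It assumes a single player, a single iteration, and the standard multiplicative form of the entropic mirror descent (Hedge) update. The function names, the grid of candidate learning rates, and the choice of fitting `eta` by minimizing the KL divergence between the observed and predicted next distribution are all assumptions made for this example.

```python
import numpy as np

def entropic_md_step(x, loss, eta):
    """One entropic mirror descent update on the simplex:
    x_next[i] is proportional to x[i] * exp(-eta * loss[i])."""
    x = np.asarray(x, dtype=float)
    loss = np.asarray(loss, dtype=float)
    # Subtracting the minimum loss does not change the normalized result
    # and keeps the exponentials numerically well behaved.
    w = x * np.exp(-eta * (loss - loss.min()))
    return w / w.sum()

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two distributions over routes (eps avoids log(0))."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    return float(np.sum(p * (np.log(p + eps) - np.log(q + eps))))

def estimate_eta(x, loss, x_next, grid=None):
    """Hypothetical estimator: pick the learning rate on a grid whose
    predicted update is closest (in KL divergence) to the observed x_next."""
    if grid is None:
        grid = np.linspace(0.0, 5.0, 501)  # assumed search range
    divs = [kl_divergence(x_next, entropic_md_step(x, loss, eta)) for eta in grid]
    return float(grid[int(np.argmin(divs))])

# Synthetic check: generate an update with a known rate, then recover it.
x0 = np.array([0.5, 0.3, 0.2])       # current distribution over three routes
costs = np.array([1.0, 2.0, 0.5])    # revealed route costs
x1 = entropic_md_step(x0, costs, eta=0.8)
eta_hat = estimate_eta(x0, costs, x1)
```

The objective is convex in `eta` for this update rule, so the grid search could be replaced by a one-dimensional convex solver; the grid is used here only to keep the sketch dependency-free.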

In order to demonstrate our methods, we developed a web application that allows players to participate in a distributed, online routing game, and we deployed the application on Amazon Mechanical Turk. When players log in, they are assigned an origin and destination on a shared network. They can choose, at each iteration, a distribution over their available routes, and each player seeks to minimize her own cost. We collect a dataset using this platform, then apply the proposed method to estimate the learning rates of each player. We observe, in particular, that after an exploration phase, the joint decision of the players remains within a small distance of the set of equilibria. We also use the estimated model parameters to predict the flow distribution over routes, and compare our predictions to the actual distributions, showing that the online learning model can be used as a predictive model over short horizons. Finally, we discuss some of the qualitative insights from the experiments, and give directions for future research.

S. Samaranayake, W. Krichene, J. Reilly, M. Delle Monache, P. Goatin, and A. Bayen. Discrete-Time System Optimal Dynamic Traffic Assignment (SO-DTA) with Partial Control for Physical Queuing Networks. Transportation Science.

@article{samaranayake2018,

author = {Samaranayake, Samitha and Krichene, Walid and Reilly, Jack and Monache, Maria Laura Delle and Goatin, Paola and Bayen, Alexandre},

title = {Discrete-Time System Optimal Dynamic Traffic Assignment (SO-DTA) with Partial Control for Physical Queuing Networks},

journal = {Transportation Science},

year = {2018},

doi = {10.1287/trsc.2017.0800},

}

We consider the System Optimal Dynamic Traffic Assignment (SO-DTA) problem with Partial Control for general networks with physical queuing. Our goal is to optimally control any subset of the network's agents to minimize the total congestion of all agents in the network. We adopt a flow dynamics model that is a Godunov discretization of the Lighthill–Whitham–Richards partial differential equation with a triangular flux function and a corresponding multicommodity junction solver. The partial control formulation generalizes the SO-DTA problem to consider cases where only a fraction of the total flow can be controlled, as may arise in the context of certain incentive schemes. This leads to a nonconvex multicommodity optimization problem. We define a multicommodity junction model that only requires full Lagrangian paths for the controllable agents, and aggregate turn ratios for the noncontrollable (selfish) agents. We show how the resulting finite horizon nonlinear optimal control problem can be efficiently solved using the discrete adjoint method, leading to gradient computations that are linear in the size of the state space and the controls.
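To make the flow dynamics model concrete, the following is a minimal sketch of a Godunov update with a triangular flux on a single road segment. It is not the paper's model: it has one commodity, no junction solver, and no control. The parameter values (`v`, `w`, `rho_jam`), the boundary handling, and the function name are assumptions chosen for illustration.

```python
import numpy as np

def godunov_step(rho, dt, dx, v=1.0, w=0.5, rho_jam=1.0,
                 inflow=0.0, outflow_cap=0.0):
    """One Godunov step for cell densities rho on a single link.

    Triangular flux: free-flow speed v, congestion wave speed w,
    jam density rho_jam. Stability requires the CFL condition v * dt / dx <= 1.
    """
    rho = np.asarray(rho, dtype=float)
    rho_c = w * rho_jam / (v + w)   # critical density
    q_max = v * rho_c               # capacity (maximum flux)

    # Demand: flow a cell can send; supply: flow a cell can receive.
    demand = np.minimum(v * rho, q_max)
    supply = np.minimum(q_max, w * (rho_jam - rho))

    # Interface fluxes, including the upstream and downstream boundaries.
    flux = np.empty(len(rho) + 1)
    flux[0] = min(inflow, supply[0])
    flux[1:-1] = np.minimum(demand[:-1], supply[1:])
    flux[-1] = min(demand[-1], outflow_cap)

    # Conservation update: density changes by net inflow over the cell.
    return rho + dt / dx * (flux[:-1] - flux[1:])

# Closed road (no inflow, no outflow): total mass should be conserved.
rho0 = np.array([0.2, 0.8, 0.1, 0.0])
rho = rho0.copy()
for _ in range(20):
    rho = godunov_step(rho, dt=0.5, dx=1.0)
```

With both boundaries closed, vehicles accumulate at the downstream end while the total number of vehicles stays constant, which is a quick sanity check on the conservation form of the update.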